Master thesis project

- Dates: Sep 2018 - May 2019

- Team: Nathan BIETTE

- Technologies:
  - Nvidia Falcor API
  - Direct3D 12
  - Nvidia RTX
  - C++ / HLSL

Hybrid Post-Processed and Ray-Traced Depth-of-Field

Overview

The goal of this master's thesis project is to develop a hybrid rasterized/ray-traced depth-of-field effect. Depth of field (DOF) is the blur visible on objects outside the plane of focus in pictures taken with real-world cameras, especially when the DOF is shallow (a small zone of focus). When DOF is rendered as a rasterized post-process, however, many artefacts can appear. By using ray tracing, I aim to mitigate some of those artefacts while keeping the framerate above 30 fps.

Hybrid Depth-of-Field Effect Overview

To achieve this effect, I render a normal image using rasterization and apply a post-processing pass to it, based on the implementation described in Next-Generation Post Processing in Call of Duty: Advanced Warfare (slides).

I modified the set of passes applied to the image so that the near and far parts of the image are rendered into two separate layers. A ray tracing pass then corrects partial occlusion artefacts, and its result is blended into the layers before they are composited back together with the initial image.

To implement these passes, I used the DXR tutorial code as a starting point and modified it to add my custom passes. The project uses the Nvidia Falcor API, which is built on top of Direct3D 12.

My contributions

- Surveyed many different DOF techniques presented in scientific papers and industry talks (among them the recent Unreal Engine DOF implementation, see slides)

- Successfully pitched the new research topic of hybrid rendering to my supervisor, leading to the award of a research grant

- Re-engineered the DOF implementation presented by Sledgehammer Games in Next-Generation Post Processing in Call of Duty: Advanced Warfare

- Modified the set of passes to include ray tracing efficiently

- Programmed the passes in HLSL and C++ with the Nvidia Falcor API on top of Direct3D 12, and tested the new effect on an Nvidia RTX 2080

Project Description

Real-time ray tracing, a new tool in the rendering engineer's toolbox

Recent advances in hardware acceleration have turned real-time ray tracing into a reality. Such hardware solves ray/geometry intersections much faster than a CPU can. This makes it possible to use rays during real-time rendering, alongside classic rasterization, to add effects such as soft shadows, reflections and transparency. Ray tracing has been used in offline rendering for many years, especially in the movie industry, where rendering a single frame purely with ray tracing can take hours even on state-of-the-art hardware. Research on ray tracing and rasterization techniques has been active for several decades, pushing back the boundaries of realistic image rendering for both real-time and offline applications. However, the two families of techniques have never truly been mixed, as ray tracing was too slow for real-time rendering and rasterization was not realistic enough for offline rendering.

SIGGRAPH talk introducing DirectX Raytracing

Now that hardware acceleration makes real-time ray tracing possible to some extent, a unique opportunity appears: developing new hybrid rendering techniques that use the most suitable tool for each effect, depending on the requirements of the scene. Hybrid rendering could benefit both the video game industry and the movie industry. Even though hardware-accelerated ray tracing still needs to reach the mass market and be more widely adopted in games, the movie industry could be the first to use this technology widely. Combining raster-based rendering and ray tracing can deliver high visual quality at interactive framerates, letting artists iterate much faster in their creative process.

How to post-process depth-of-field?

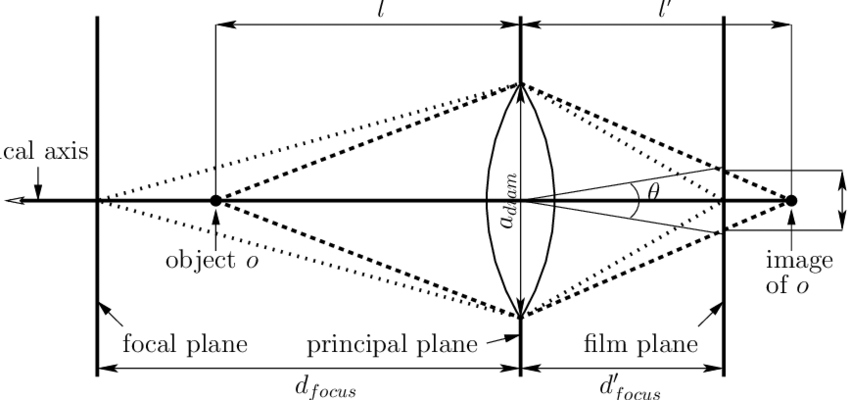

Depth of field (DOF) is the blur produced by a real-world camera when an object lies outside the zone of focus. It arises because the light rays coming from a point of such an object pass through the lens but do not converge exactly on the camera sensor. The result is a spot rather than a point on the sensor, and the superposition of many spots yields the blurry effect. This spot is called the circle of confusion (COC).
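The size of that spot follows from the standard thin-lens model. The snippet below is a minimal C++ illustration of the textbook formula, not the project's shader code; the function name and its parameters (aperture diameter, focal length, focus distance and depth, all in one unit) are assumptions chosen for the example.

```cpp
#include <cassert>
#include <cmath>

// Diameter of the circle of confusion (COC) on the sensor for a thin lens.
// All distances use the same unit (e.g. millimetres):
//   aperture      - aperture diameter A (focal length / f-number)
//   focalLength   - focal length f
//   focusDistance - distance S1 of the plane in focus (must exceed f)
//   depth         - distance S2 of the shaded point (must be positive)
double circleOfConfusion(double aperture, double focalLength,
                         double focusDistance, double depth) {
    assert(focusDistance > focalLength && depth > 0.0);
    // c = A * |S2 - S1| / S2 * f / (S1 - f)
    return aperture * std::fabs(depth - focusDistance) / depth *
           focalLength / (focusDistance - focalLength);
}
```

For example, with a 25 mm aperture, a 50 mm focal length and focus at 2 m, a point at 1 m gets a COC of about 0.64 mm on the sensor, while a point exactly at 2 m gets none.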

In games, DOF is usually implemented as a post-process effect: based on the information in the Z-buffer, the image is blurred according to the computed COC size.

However, this blurring pass is complex, and many artefacts can appear if it isn't done carefully. First, the depth ordering of the objects in the scene must be respected: a background object must not “leak” onto a foreground one while being blurred. Second, because we are blurring the colors of the final (sharp) image, we have no access to the background hidden behind foreground objects. A thin out-of-focus object in the foreground of a camera shot should look semi-transparent, but the final image does not tell us what the background behind it looks like. This is the partial occlusion problem, which prevents us from rendering a blurry, semi-transparent foreground on top of a sharp background.

Moreover, because graphics hardware is faster at reading textures than writing them, the blurring is usually performed in a scatter-as-gather manner: the colors of the surrounding samples are gathered into each pixel according to the samples' COC sizes. If a nearby sample has a COC large enough to cover the target pixel, its color is added to the resulting color of the target pixel. This approach causes bokeh shapes and very bright parts of the initial image to be poorly rendered in the final image.
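To make the scatter-as-gather idea concrete, here is a hypothetical 1D C++ sketch on a single-channel row of pixels. The 1/r weighting (a 1D stand-in for dividing by the spot area), the 0.5-pixel minimum radius and the names are assumptions; the real pass works in 2D on GPU textures.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// 1D scatter-as-gather blur: each output pixel gathers every neighbour whose
// COC radius (in pixels) is large enough to reach it. A neighbour's weight is
// the inverse of its spot size, so widely spread samples contribute less.
std::vector<float> gatherBlur1D(const std::vector<float>& color,
                                const std::vector<float>& cocRadius,
                                int maxRadius) {
    assert(color.size() == cocRadius.size());
    const int n = static_cast<int>(color.size());
    std::vector<float> out(color.size());
    for (int x = 0; x < n; ++x) {
        float sum = 0.0f, weightSum = 0.0f;
        for (int dx = -maxRadius; dx <= maxRadius; ++dx) {
            const int s = x + dx;
            if (s < 0 || s >= n) continue;
            // A sharp sample still covers its own pixel (radius of half a pixel).
            const float r = std::max(cocRadius[s], 0.5f);
            // The sample's spot reaches x only if its radius covers the distance.
            if (std::fabs(static_cast<float>(dx)) > r) continue;
            const float w = 1.0f / r;
            sum += w * color[s];
            weightSum += w;
        }
        out[x] = weightSum > 0.0f ? sum / weightSum : color[x];
    }
    return out;
}
```

For a row {0, 10, 0} where only the middle sample has a COC radius of 1 pixel, each neighbour receives about 3.33 while the middle pixel keeps 10, because its sharp neighbours do not reach it.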

If the rendering uses layering and compositing, discontinuities and other artefacts can also appear, depending on how the compositing is performed.

How is ray tracing helping us?

Ray tracing can remove partial occlusion artefacts by selectively ray tracing the occluded areas. But ray tracing is expensive and must be used efficiently. That is why we compute the Z gradient of the Z-buffer to detect where partial occlusion artefacts might occur. We can then ray trace those specific pixels and add them to the background layer before compositing the layers back together.

The challenge here is to filter the Z-buffer depth map to find the Z discontinuities, and to ray trace only the occluded areas in regions with a high Z gradient. To speed things up, discontinuities in the background can be ignored, but discontinuities in the foreground still need to be handled.
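A minimal sketch of this selection criterion, assuming a linear depth buffer and a single depth value `nearFocus` marking the start of the zone of focus. Both are assumptions, as is the simple forward-difference test used here in place of a filtered gradient; an edge is kept only when its nearer side lies in the foreground.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Marks pixels next to a depth discontinuity whose nearer side lies in the
// foreground (depth < nearFocus). Background discontinuities are ignored.
// depth is a row-major width x height buffer of linear view-space depths.
std::vector<bool> partialOcclusionMask(const std::vector<float>& depth,
                                       int width, int height,
                                       float jumpThreshold, float nearFocus) {
    assert(depth.size() == static_cast<std::size_t>(width) * height);
    std::vector<bool> mask(depth.size(), false);
    auto at = [&](int x, int y) {
        return depth[static_cast<std::size_t>(y) * width + x];
    };
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const float z = at(x, y);
            // Right and bottom neighbours (clamped at the image border).
            const int nx[2] = {std::min(x + 1, width - 1), x};
            const int ny[2] = {y, std::min(y + 1, height - 1)};
            for (int i = 0; i < 2; ++i) {
                const float zn = at(nx[i], ny[i]);
                const bool jump = std::fabs(zn - z) > jumpThreshold;
                const bool foregroundEdge = std::min(z, zn) < nearFocus;
                if (jump && foregroundEdge) {
                    mask[static_cast<std::size_t>(y) * width + x] = true;
                }
            }
        }
    }
    return mask;
}
```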

Initial depth-of-field implementation in Call of Duty: Advanced Warfare

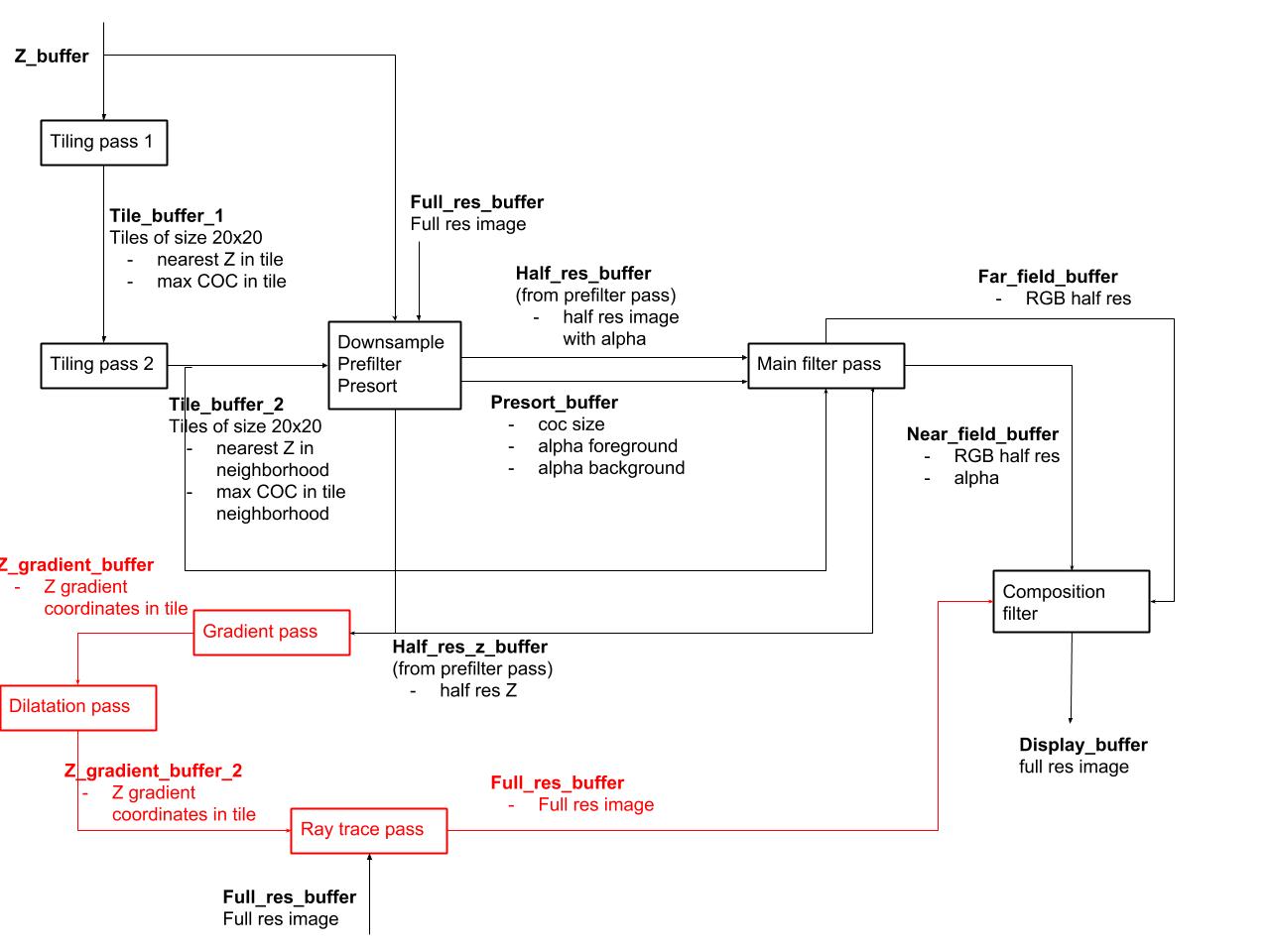

The tiling pass is used to compute the COC and the maximum Z in tiles of 20 by 20 pixels.
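A CPU-side sketch of the tiling reduction, assuming per-pixel COC radii and linear depths are already available. The text mentions the maximum Z; the sketch keeps both ends of each tile's depth range, since which end counts as "closest" depends on the depth convention. Type and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Per-tile summary produced by the tiling pass.
struct TileInfo {
    float maxCoc;    // largest COC radius in the tile
    float minDepth;  // nearest linear depth (smaller = closer)
    float maxDepth;  // farthest linear depth
};

struct TileGrid {
    int tilesX = 0;
    int tilesY = 0;
    std::vector<TileInfo> tiles;  // row-major, tilesX * tilesY entries
};

// Reduces per-pixel COC radii and depths into tileSize x tileSize tiles
// (20 x 20 in the text). Partial tiles on the right/bottom borders are kept.
TileGrid buildTiles(const std::vector<float>& coc, const std::vector<float>& depth,
                    int width, int height, int tileSize) {
    assert(coc.size() == depth.size());
    assert(coc.size() == static_cast<std::size_t>(width) * height);
    TileGrid grid;
    grid.tilesX = (width + tileSize - 1) / tileSize;
    grid.tilesY = (height + tileSize - 1) / tileSize;
    const float inf = std::numeric_limits<float>::infinity();
    grid.tiles.assign(static_cast<std::size_t>(grid.tilesX) * grid.tilesY,
                      TileInfo{0.0f, inf, -inf});
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t p = static_cast<std::size_t>(y) * width + x;
            TileInfo& t = grid.tiles[static_cast<std::size_t>(y / tileSize) * grid.tilesX +
                                     x / tileSize];
            t.maxCoc = std::max(t.maxCoc, coc[p]);
            t.minDepth = std::min(t.minDepth, depth[p]);
            t.maxDepth = std::max(t.maxDepth, depth[p]);
        }
    }
    return grid;
}
```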

The dilate pass expands the foreground tiles outwards to cover the area that will be blurred, where semi-transparent foreground objects leak onto in-focus or background objects.
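A simplified sketch of the tile dilation: each tile takes the largest max COC found within `radius` neighbouring tiles. Unlike a foreground-aware dilation, this version treats all tiles alike; the names and the `radius` parameter are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Dilates a tilesX x tilesY grid of per-tile max COC values (assumed
// non-negative): every tile takes the largest value within `radius` tiles,
// so the blur of a tile can spread over its neighbours.
std::vector<float> dilateTiles(const std::vector<float>& maxCoc,
                               int tilesX, int tilesY, int radius) {
    assert(maxCoc.size() == static_cast<std::size_t>(tilesX) * tilesY);
    std::vector<float> out(maxCoc.size());
    for (int ty = 0; ty < tilesY; ++ty) {
        for (int tx = 0; tx < tilesX; ++tx) {
            float m = 0.0f;
            for (int dy = -radius; dy <= radius; ++dy) {
                for (int dx = -radius; dx <= radius; ++dx) {
                    const int x = tx + dx, y = ty + dy;
                    if (x < 0 || y < 0 || x >= tilesX || y >= tilesY) continue;
                    m = std::max(m, maxCoc[static_cast<std::size_t>(y) * tilesX + x]);
                }
            }
            out[static_cast<std::size_t>(ty) * tilesX + tx] = m;
        }
    }
    return out;
}
```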

The presort pass then computes the alpha contribution of each sample to the foreground and background layers, relative to the closest pixel in the tile and according to the sample's COC size. The further a pixel is from the focus plane in terms of depth, the bigger its COC and the more spread out its color; its alpha should therefore be smaller (corresponding to a larger and more transparent spot on the camera sensor).
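The inverse relation between COC size and alpha, and the split of a sample between the two layers, can be sketched as follows. The clamp to 1, the smoothstep transition and the `depthScale` tuning constant are assumptions in the spirit of the slides, not their exact code.

```cpp
#include <algorithm>
#include <cassert>

constexpr float kPi = 3.14159265358979f;

// Alpha of a sample whose COC has radius cocRadius (pixels): its energy is
// spread over a disc of area pi * r^2, so each covered pixel receives 1/area.
// Clamped to 1 so that an (almost) in-focus sample stays opaque.
float sampleAlpha(float cocRadius) {
    assert(cocRadius >= 0.0f);
    if (cocRadius <= 0.0f) return 1.0f;
    return std::min(1.0f, 1.0f / (kPi * cocRadius * cocRadius));
}

struct LayerWeights {
    float background;
    float foreground;
};

// Splits a sample between the background and foreground layers by comparing
// its depth with the closest depth of its tile: samples at the closest depth
// go to the foreground, samples clearly behind it go to the background.
LayerWeights presortWeights(float depth, float closestTileDepth, float depthScale) {
    float d = depthScale * (depth - closestTileDepth);
    d = std::min(std::max(d, 0.0f), 1.0f);
    const float s = d * d * (3.0f - 2.0f * d);  // smoothstep(0, 1, d)
    return {s, 1.0f - s};
}
```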

The downsample pass filters the full-resolution image with a 9-tap bilateral filter, producing a half-resolution image to work with. It low-pass filters the image while preserving the Z discontinuities. The idea is to fill in the gaps of the main filter pass by prefiltering while downsampling.
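A single-channel sketch of a 9-tap bilateral downsample. The 1-2-1 binomial spatial kernel, the Gaussian depth-similarity weight and `depthSigma` are assumptions; the weights used in the slides may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Half-resolution bilateral downsample with a 3x3 (9-tap) footprint centred
// on every second full-resolution pixel. Spatial weights follow a 1-2-1
// binomial kernel; each tap is also weighted by its depth similarity to the
// centre tap, so colours across a depth discontinuity are not blended.
std::vector<float> bilateralDownsample(const std::vector<float>& color,
                                       const std::vector<float>& depth,
                                       int width, int height, float depthSigma) {
    assert(color.size() == depth.size());
    assert(color.size() == static_cast<std::size_t>(width) * height);
    assert(depthSigma > 0.0f);
    const int halfW = width / 2, halfH = height / 2;
    const float binomial[3] = {1.0f, 2.0f, 1.0f};
    auto idx = [width](int x, int y) { return static_cast<std::size_t>(y) * width + x; };
    std::vector<float> out(static_cast<std::size_t>(halfW) * halfH);
    for (int y = 0; y < halfH; ++y) {
        for (int x = 0; x < halfW; ++x) {
            const int cx = 2 * x, cy = 2 * y;  // centre tap in full resolution
            const float zc = depth[idx(cx, cy)];
            float sum = 0.0f, weightSum = 0.0f;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int sx = std::min(std::max(cx + dx, 0), width - 1);
                    const int sy = std::min(std::max(cy + dy, 0), height - 1);
                    const float dz = (depth[idx(sx, sy)] - zc) / depthSigma;
                    const float w = binomial[dx + 1] * binomial[dy + 1] * std::exp(-dz * dz);
                    sum += w * color[idx(sx, sy)];
                    weightSum += w;
                }
            }
            // The centre tap always has weight 4, so weightSum is never 0.
            out[static_cast<std::size_t>(y) * halfW + x] = sum / weightSum;
        }
    }
    return out;
}
```

On a half-black, half-white image whose two halves lie at very different depths, the output pixel on the white side stays fully white instead of picking up the black neighbour, which is the edge-preserving behaviour the pass relies on.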

The main filter pass is a 49-tap circular filter scaled according to the max COC of each tile. It performs the blurring by gathering the color of each nearby sample and combining them by alpha addition using the presorted alpha.
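One way to lay out 49 taps on a disc is a centre tap plus three concentric rings of 8, 16 and 24 samples (1 + 8 + 16 + 24 = 49). The sketch below builds such a kernel scaled by the tile's max COC; the ring layout is an assumption, and the gathering with alpha addition is left out.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Offset {
    float x, y;
};

// Builds a 49-tap circular kernel: one centre tap plus rings of 8, 16 and 24
// taps. Ring k (k = 1..3) has radius k/3 of the tile's max COC radius, so the
// kernel footprint scales with the amount of blur in the tile.
std::vector<Offset> circularKernel49(float maxCocRadius) {
    const float pi = 3.14159265358979f;
    std::vector<Offset> taps;
    taps.push_back({0.0f, 0.0f});
    for (int ring = 1; ring <= 3; ++ring) {
        const int count = 8 * ring;
        const float radius = maxCocRadius * ring / 3.0f;
        for (int i = 0; i < count; ++i) {
            const float angle = 2.0f * pi * i / count;
            taps.push_back({radius * std::cos(angle), radius * std::sin(angle)});
        }
    }
    assert(taps.size() == 49);
    return taps;
}
```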

A median pass is then applied to the result of the main pass, which is scaled back to full resolution.
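A plain 3x3 median filter on a single channel is sketched below; a median is a common way to suppress isolated outlier samples. The 3x3 footprint and clamp-to-edge addressing are assumptions.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// 3x3 median filter on a single-channel, row-major image (clamp-to-edge).
std::vector<float> median3x3(const std::vector<float>& img, int width, int height) {
    assert(img.size() == static_cast<std::size_t>(width) * height);
    std::vector<float> out(img.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            std::array<float, 9> window{};
            int k = 0;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int sx = std::min(std::max(x + dx, 0), width - 1);
                    const int sy = std::min(std::max(y + dy, 0), height - 1);
                    window[k++] = img[static_cast<std::size_t>(sy) * width + sx];
                }
            }
            // Partially sort so that element 4 is the median of the 9 values.
            std::nth_element(window.begin(), window.begin() + 4, window.end());
            out[static_cast<std::size_t>(y) * width + x] = window[4];
        }
    }
    return out;
}
```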

I modified this filter graph to accommodate the raytracing of partially occluded areas.

First, the main filter pass no longer renders to a single texture but to two, one for the far field and one for the near field. The target texture is selected from the Z value of each pixel, depending on whether it lies in front of or behind the zone of focus.

Note: There is a zone of focus rather than just a plane of focus because each cell of a camera sensor has a finite surface: a COC small enough to fit within a single cell is perceived by the camera exactly like a perfectly focused point.
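Under the thin-lens model, where the COC diameter is c = A * |S2 - S1| / S2 * f / (S1 - f), the zone of focus can be found by solving c = cell size on each side of the focus plane, which in turn tells which layer a pixel belongs to. In this sketch the names and the `InFocus` category (pixels assumed to stay in the sharp image) are assumptions.

```cpp
#include <cassert>
#include <limits>

enum class Layer { Near, InFocus, Far };

struct FocusZone {
    double nearLimit;
    double farLimit;  // +infinity when everything behind the focus plane stays sharp
};

// Depth range in which the thin-lens COC stays below cellSize, i.e. where
// points look as sharp as a perfectly focused point. With k = A*f/(S1 - f):
//   near limit: k*S1 / (k + cellSize)
//   far limit:  k*S1 / (k - cellSize), or infinity if k <= cellSize
FocusZone focusZone(double aperture, double focalLength,
                    double focusDistance, double cellSize) {
    assert(focusDistance > focalLength && cellSize > 0.0);
    const double k = aperture * focalLength / (focusDistance - focalLength);
    FocusZone zone;
    zone.nearLimit = k * focusDistance / (k + cellSize);
    zone.farLimit = k > cellSize ? k * focusDistance / (k - cellSize)
                                 : std::numeric_limits<double>::infinity();
    return zone;
}

// Chooses the layer a pixel is rendered into from its depth.
Layer classify(double depth, const FocusZone& zone) {
    if (depth < zone.nearLimit) return Layer::Near;
    if (depth > zone.farLimit) return Layer::Far;
    return Layer::InFocus;
}
```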

Then the Z-buffer is filtered at half resolution with a 5x5 Sobel operator in both the X and Y directions to obtain the gradient of Z, and the result is written only if the target pixel is in the foreground. That way we specifically target the depth edges of foreground objects and use ray tracing to render what lies behind them.
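A CPU sketch of the foreground-only gradient, assuming the common separable 5x5 Sobel kernels (outer product of the smoothing vector [1 4 6 4 1] and the derivative vector [-1 -2 0 2 1]) and a depth threshold `nearLimit` for the foreground, both assumptions. It would be run on the half-resolution depth buffer.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Depth-gradient magnitude from a 5x5 Sobel operator, written only for
// foreground pixels (depth < nearLimit); other pixels receive 0.
std::vector<float> foregroundDepthGradient(const std::vector<float>& depth,
                                           int width, int height, float nearLimit) {
    assert(depth.size() == static_cast<std::size_t>(width) * height);
    const float smooth[5] = {1.0f, 4.0f, 6.0f, 4.0f, 1.0f};
    const float deriv[5] = {-1.0f, -2.0f, 0.0f, 2.0f, 1.0f};
    std::vector<float> grad(depth.size(), 0.0f);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t p = static_cast<std::size_t>(y) * width + x;
            if (depth[p] >= nearLimit) continue;  // only foreground pixels
            float gx = 0.0f, gy = 0.0f;
            for (int j = -2; j <= 2; ++j) {
                for (int i = -2; i <= 2; ++i) {
                    const int sx = std::min(std::max(x + i, 0), width - 1);
                    const int sy = std::min(std::max(y + j, 0), height - 1);
                    const float z = depth[static_cast<std::size_t>(sy) * width + sx];
                    gx += deriv[i + 2] * smooth[j + 2] * z;  // derivative along X
                    gy += smooth[i + 2] * deriv[j + 2] * z;  // derivative along Y
                }
            }
            grad[p] = std::sqrt(gx * gx + gy * gy);
        }
    }
    return grad;
}
```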

A dilate pass then grows those targeted areas inwards with a 9-tap filter scaled by the max COC size of each 20x20 tile, expanding the area considered as partially occluded.
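A hypothetical sketch of such a 9-tap dilation: the centre tap plus eight taps placed at the tile's max COC distance in the eight directions, keeping the largest gradient. Growing only into foreground pixels is how this sketch interprets "inwards"; names and parameters are assumptions. Because the taps are sparse, pixels between the centre and the outer taps are not covered.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Sparse 9-tap dilation of the gradient mask. tileMaxCoc holds one max COC
// radius (pixels) per tileSize x tileSize tile, row-major. Background pixels
// (depth >= nearLimit) are left at 0 so the mask only grows into the foreground.
std::vector<float> dilateOcclusionMask(const std::vector<float>& grad,
                                       const std::vector<float>& depth,
                                       const std::vector<float>& tileMaxCoc,
                                       int width, int height, int tileSize,
                                       float nearLimit) {
    assert(grad.size() == static_cast<std::size_t>(width) * height);
    assert(depth.size() == grad.size());
    const int tilesX = (width + tileSize - 1) / tileSize;
    const int tilesY = (height + tileSize - 1) / tileSize;
    assert(tileMaxCoc.size() == static_cast<std::size_t>(tilesX) * tilesY);
    std::vector<float> out(grad.size(), 0.0f);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t p = static_cast<std::size_t>(y) * width + x;
            if (depth[p] >= nearLimit) continue;  // grow only into the foreground
            const float coc =
                tileMaxCoc[static_cast<std::size_t>(y / tileSize) * tilesX + x / tileSize];
            const int r = static_cast<int>(std::lround(coc));
            float m = 0.0f;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int sx = std::min(std::max(x + dx * r, 0), width - 1);
                    const int sy = std::min(std::max(y + dy * r, 0), height - 1);
                    m = std::max(m, grad[static_cast<std::size_t>(sy) * width + sx]);
                }
            }
            out[p] = m;
        }
    }
    return out;
}
```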

Finally, we use the intensity of the gradient to scale the number of rays, shooting more rays closer to the edge: the further we are from the edge, the less transparent the foreground object is and the less visible the background is, so fewer rays are needed to get an acceptable result. The result is written into the initial image, which is then composited back with the far and near layers.
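A hedged sketch of these last two steps: a ray count proportional to the gradient intensity, and a premultiplied-alpha "over" composite of the near layer, the far layer and the corrected sharp image. The linear ray mapping, the rounding up and the layer order are assumptions, not the project's exact code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Number of rays for a pixel of the dilated mask: proportional to the
// gradient intensity (strongest at the edge), clamped to [0, maxRays].
// Rounding up gives any pixel with a non-zero gradient at least one ray.
int raysForPixel(float gradient, float maxGradient, int maxRays) {
    assert(maxGradient > 0.0f && maxRays >= 0);
    const float t = std::min(std::max(gradient / maxGradient, 0.0f), 1.0f);
    return static_cast<int>(std::ceil(t * maxRays));
}

struct Rgba {
    float r, g, b, a;  // colour premultiplied by alpha
};

// Porter-Duff "over" for premultiplied colours: front composited over back.
Rgba over(const Rgba& front, const Rgba& back) {
    const float k = 1.0f - front.a;
    return {front.r + k * back.r, front.g + k * back.g,
            front.b + k * back.b, front.a + k * back.a};
}

// Final composite: near layer over far layer over the corrected sharp image.
Rgba compositePixel(const Rgba& nearLayer, const Rgba& farLayer, const Rgba& sharp) {
    return over(nearLayer, over(farLayer, sharp));
}
```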

Follow-up research works

A team of Master's students took over the project and improved upon the initial implementation from this dissertation.

A poster presenting the improved technique was published at SIGGRAPH 2020.

A full paper presenting the results of this research work was published at GRAPP 2022.